3. CCPP Configuration and Build Options

While the CCPP-Framework code can be compiled independently, the CCPP-Physics code can only be used within a host modeling system that provides the variables and the kind, type, and DDT definitions. As such, it is advisable to integrate the CCPP configuration and build process with the host model’s. Part of the build process, known as the prebuild step since it precedes compilation, involves running a Python script that performs multiple functions. These functions include configuring the CCPP-Physics for use with the host model and autogenerating Fortran code to communicate variables between the physics and the dynamical core. The prebuild step is discussed in detail in Chapter 8.

The SCM and the UFS Atmosphere are supported for use with the CCPP. In the case of the UFS Atmosphere as the host model, there are several build options. The choice can be specified through command-line options supplied to the compile.sh script for manual compilation or through a regression test (RT) configuration file. Detailed instructions for building the UFS Atmosphere and the SCM are provided in the UFS Weather Model User Guide and the SCM User Guide.

The relevant options for building CCPP with the UFS Atmosphere can be described as follows:

Without CCPP (CCPP=N): The code is compiled without CCPP and runs using the original UFS Atmosphere physics drivers, such as GFS_physics_driver.F90. This option entirely bypasses all CCPP functionality and is only used for RT against the unmodified UFS Atmosphere codebase.

With CCPP (CCPP=Y): The code is compiled with CCPP enabled and restricted to CCPP-compliant physics. That is, any parameterization to be called as part of a suite must be available in CCPP. Physics scheme selection and order are determined at runtime by an external suite definition file (SDF; see Chapter 4 for further details on the SDF). The existing physics-calling code in GFS_physics_driver.F90 and GFS_radiation_driver.F90 is bypassed altogether in this mode, and any additional code previously contained therein that is needed to connect parameterizations within a suite is executed from the so-called CCPP-compliant “interstitial schemes”. Compiling with CCPP links CCPP-Framework and CCPP-Physics to the executable and is restricted to CCPP-compliant physics defined by one or more SDFs used at compile time.

For all options that activate the CCPP, the ccpp_prebuild.py Python script must be run. This may be done manually or as part of a host model build-time script. In the case of the SCM, ccpp_prebuild.py must be run manually, as it is not incorporated in that model’s build system. In the case of the UFS Atmosphere, ccpp_prebuild.py is run automatically as a step in the build system, although it can be run manually for debugging purposes.
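A manual run for debugging can be sketched as follows. This is a minimal illustration, not the build system's own invocation: the script takes the path to a host-model configuration file through its --config option, while both paths shown here are assumptions about the repository layout and should be adjusted to the actual checkout. Running the script with --help lists any further options it supports.

```python
import sys


def prebuild_command(config_path,
                     script="ccpp/framework/scripts/ccpp_prebuild.py"):
    """Build the argument list for a manual ccpp_prebuild.py run.

    Both the default script location and the config path passed in by the
    caller are assumed, illustrative paths; they depend on the host model.
    """
    return [sys.executable, script, f"--config={config_path}"]


# Assumed location of the host-model configuration file.
cmd = prebuild_command("ccpp/config/ccpp_prebuild_config.py")
print(" ".join(cmd))

# To execute the prebuild step from the host model's top-level directory:
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Building the argument list separately from executing it makes it easy to print and inspect the exact command before running it.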

The path to a host-model specific configuration file is the only required argument to ccpp_prebuild.py.

Such files are included with the SCM and ufs-weather-model repositories, and must be included with the code of

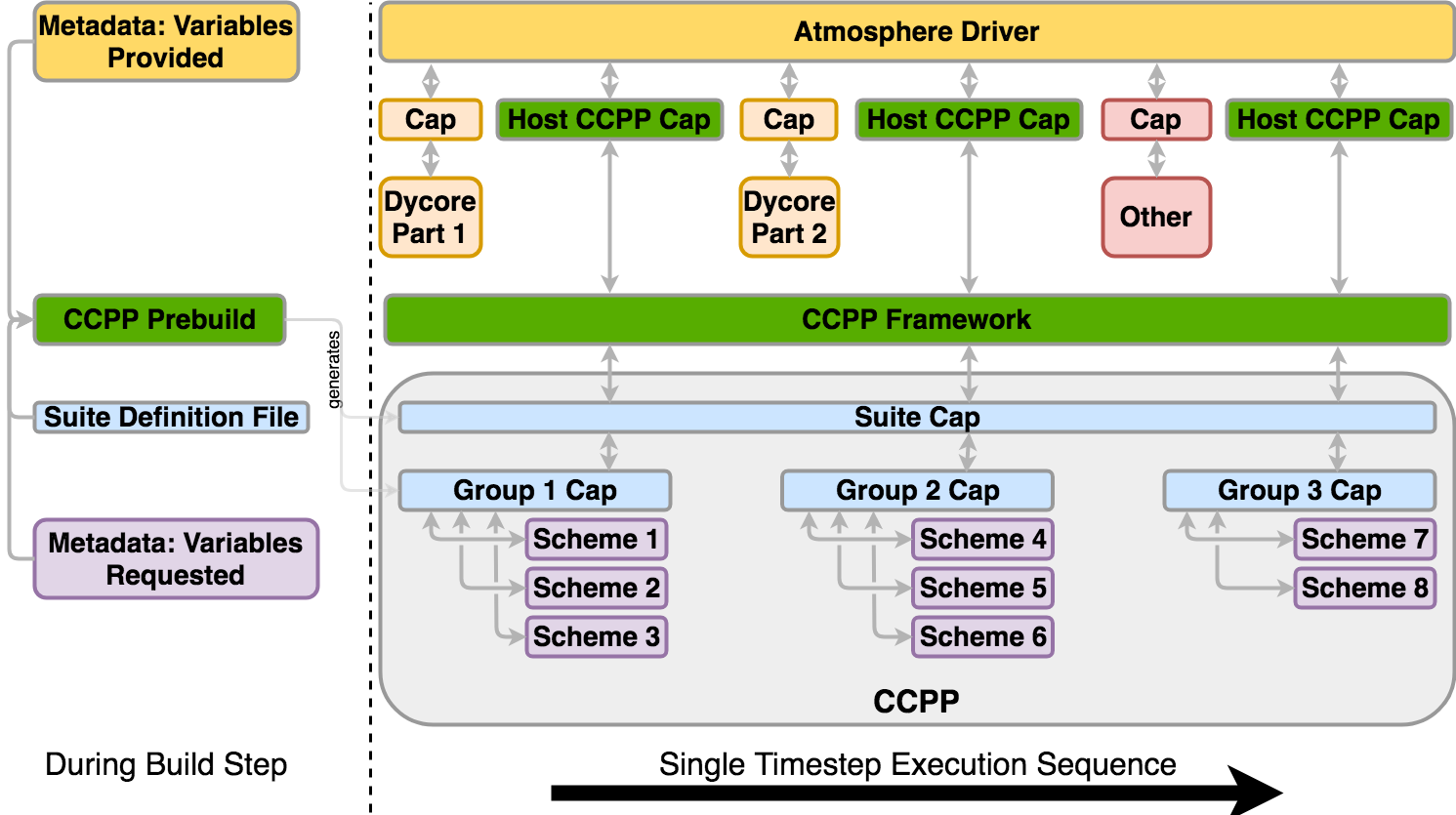

any host model to use the CCPP. Figure 3.1 depicts the main functions of the

ccpp_prebuild.py script for the build. Using information included in the configuration file

and the SDF(s), the script parses the SDF(s) and only matches provided/requested variables that are used

within the particular physics suite(s). The script autogenerates software caps for the physics suite(s) as a

whole and for each physics group as defined in the SDF(s). At runtime, a single SDF is used to select the

suite that will be executed in the run. This arrangement allows for efficient variable recall (which

is done once for all physics schemes within each group of a suite), leads to a reduced memory footprint of the

CCPP, and speeds up execution.

Fig. 3.1 This figure depicts an example of the interaction between an atmospheric model and CCPP-Physics for one timestep, and a single SDF, with execution progressing toward the right. The “Atmosphere Driver” box represents model superstructure code, perhaps responsible for I/O, time-stepping, and other model component interactions. Software caps are autogenerated for the suite and physics groups, defined in the SDF provided to the ccpp_prebuild.py script. The suite must be defined via the SDF at prebuild time. When multiple SDFs are provided during the build step, multiple suite caps and associated group caps are produced, but only one is used at runtime.
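The relationship between an SDF and the caps generated from it can be illustrated with a short sketch. It is not the logic of ccpp_prebuild.py: it only reads a small SDF-like XML document and lists one cap for the suite plus one per group. The suite, group, and scheme names are hypothetical, the XML layout is a simplified assumption (Chapter 4 describes the actual SDF format), and the cap labels are placeholders rather than the names of the generated files.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical SDF: one suite with two groups of schemes.
SDF_TEXT = """
<suite name="example_suite" version="1">
  <group name="radiation">
    <subcycle loop="1">
      <scheme>example_rad_pre</scheme>
      <scheme>example_rad</scheme>
    </subcycle>
  </group>
  <group name="physics">
    <subcycle loop="1">
      <scheme>example_pbl</scheme>
      <scheme>example_microphysics</scheme>
    </subcycle>
  </group>
</suite>
"""


def groups_and_schemes(sdf_text):
    """Return the suite name and a mapping of group name -> ordered schemes."""
    root = ET.fromstring(sdf_text.strip())
    groups = {}
    for group in root.iter("group"):
        groups[group.get("name")] = [s.text.strip() for s in group.iter("scheme")]
    return root.get("name"), groups


def cap_labels(suite, groups):
    """One label for the suite cap plus one per group (placeholder names)."""
    return [f"{suite}_cap"] + [f"{suite}_{name}_cap" for name in groups]


suite, groups = groups_and_schemes(SDF_TEXT)
caps = cap_labels(suite, groups)

print("suite:", suite)
for name, schemes in groups.items():
    print(f"  group {name}: {', '.join(schemes)}")
print("caps to generate:", len(caps))
```

With several SDFs supplied at build time, the same listing would be produced once per suite, which corresponds to the multiple suite caps and group caps mentioned in the caption above.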